Table of Contents

Additional concepts mentioned in Exam Guide

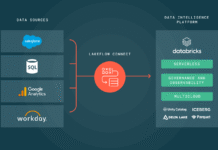

The Lakehouse and Data Warehouse Relationship

A Modern Paradigm

The Data Lakehouse represents an evolution in data architecture, combining the best attributes of a Data Warehouse and a Data Lake. It is not merely a replacement for the data warehouse but rather a new paradigm that builds upon it.

A traditional Data Warehouse stores structured, processed data in a predefined schema, optimized for business intelligence (BI) and reporting. It offers high performance and strong data governance but struggles with unstructured data and can be expensive and inflexible.

A Data Lake, in contrast, stores vast amounts of raw data—structured, semi-structured, and unstructured—in its native format. This provides flexibility and cost-effectiveness for data science and machine learning workloads but often at the cost of data quality, reliability, and performance, leading to what is often called a “data swamp.”

The Data Lakehouse architecture, implemented by platforms like Databricks, runs directly on top of a cloud data lake (e.g., AWS S3, Azure Data Lake Storage). It brings the reliability, strong governance, and performance features of a data warehouse to the data lake. This is achieved through an open-source transactional layer like Delta Lake, which provides ACID transactions, schema enforcement, and time travel capabilities directly on the files in the data lake.

Code snippet

+-----------------------------+ +-----------------------------+

| Data Warehouse | | Data Lake |

|-----------------------------| |-----------------------------|

| + Structured Data | | + All Data Types (Raw) |

| + Schema-on-Write | | + Schema-on-Read |

| + High Performance for BI | | + Flexible for ML/AI |

| + Strong Governance | | + Prone to "Data Swamp" |

+-------------+---------------+ +-------------+---------------+

| |

+-----------------+------------------+

|

+------------v------------+

| Data Lakehouse |

|-------------------------|

| + All Data Types |

| + ACID Transactions |

| + Schema Enforcement |

| + Governance & Security |

| + BI & ML on one copy |

+-------------------------+In essence, the data lakehouse eliminates the need for separate, siloed systems. Data engineers, analysts, and data scientists can all work on the same consistent, up-to-date data, streamlining workflows and reducing data redundancy.

Data Quality: Lakehouse vs. Lake

From Data Swamp to Reliable Data

The primary improvement in data quality in the data lakehouse over the data lake comes from the introduction of a transactional storage layer, such as Delta Lake.

In a standard data lake, data is often stored in formats like Parquet or ORC. If a job writing data to the lake fails midway, it can leave behind corrupted files or partial data, leading to inconsistent and unreliable reads. There is no mechanism to enforce data integrity.

The lakehouse, powered by Delta Lake, solves this by providing ACID (Atomicity, Consistency, Isolation, Durability) transactions.

- Atomicity: Every transaction (e.g., an

INSERT,UPDATE, orDELETE) is treated as a single, all-or-nothing operation. If it fails, the data is left in its previous state, preventing data corruption. - Schema Enforcement: The lakehouse can enforce a predefined schema. If new data being written does not conform to the table’s schema (e.g., wrong data type), the transaction is rejected, preventing data pollution. This is a “schema-on-write” approach, a hallmark of data warehouses.

- Data Validation Constraints: You can define and enforce data quality rules (e.g.,

value IS NOT NULL) directly on tables.

These features ensure that the data within the lakehouse is reliable, consistent, and ready for consumption, transforming the potentially chaotic data lake into a trustworthy source of truth.

Medallion Architecture: Bronze, Silver, and Gold Tables

A Multi-hop Approach to Quality

The Medallion Architecture is a data modeling pattern used to logically organize data in a lakehouse. It progressively improves the structure and quality of data as it flows through different layers, or tables: Bronze, Silver, and Gold.

| Table Type | Description | Data Quality | Users & Workloads |

| Bronze | Raw data ingested from source systems. | Raw, Unvalidated | Data ingestion workloads. Source for Silver tables. |

| Silver | Filtered, cleaned, and augmented data. | Queried, Validated | Business intelligence, data science, and ML feature engineering. Source for Gold tables. |

| Gold | Aggregated data for specific business use cases. | Aggregated, Curated | Business reporting, dashboards, analytics. |

Workload Sources

- Workloads using a Bronze table as a source: These are typically ETL/ELT pipelines responsible for data cleansing, transformation, and enrichment. Their goal is to take the raw data and prepare it for broader use by creating Silver tables. For example, a Spark job might read from a Bronze table of raw JSON logs, parse them, join them with reference data, and write the result to a Silver table.

- Workloads using a Gold table as a source: These are almost always analytics and reporting workloads. Business analysts using BI tools, data scientists building dashboards, or automated reporting systems will query Gold tables to get pre-aggregated, business-level insights. For example, a Tableau dashboard showing weekly sales trends would connect directly to a Gold table.

Databricks Platform Architecture: Control Plane vs. Data Plane

A Hybrid Cloud Model

The Databricks Platform operates on a hybrid architecture, separating its services into a Control Plane and a Data Plane. This design balances centralized management with secure data processing within the customer’s own cloud environment.

- Control Plane: This is the backend infrastructure managed by Databricks in its own cloud account. It houses the services that manage your Databricks workspace, including the web application, notebook management, job scheduler, cluster manager, and metastore. When you interact with the Databricks UI or API, you are communicating with the Control Plane.

- Data Plane: This is where all the data processing happens. The Data Plane resides entirely within the customer’s cloud account (e.g., your AWS or Azure subscription). It contains the Databricks clusters (the virtual machines providing CPU, memory, and storage) and the associated storage (like S3 buckets or ADLS containers) where your data and cluster logs reside.

Code snippet

+------------------------------------------+

| Databricks Cloud Account |

|------------------------------------------|

| CONTROL PLANE |

| + Web App |

| + Notebooks & Workspace Assets |

| + Job Scheduler |

| + Cluster Manager |

| + Metastore (e.g., Unity Catalog) |

+---------------------+--------------------+

|

Secure API & Credentials

|

+---------------------v--------------------+

| Customer Cloud Account |

|------------------------------------------|

| DATA PLANE |

| + Databricks Clusters (VMs) |

| + Data (S3 / ADLS / GCS) |

| + Cluster Logs |

+------------------------------------------+This separation is crucial for security and governance. Your data never leaves your cloud account. The Databricks Control Plane sends commands to start and manage clusters in your Data Plane, but the clusters process your data directly from your storage.

Cluster Types: All-Purpose vs. Jobs

Interactive vs. Automated Workloads

Databricks offers two main types of clusters, each tailored for different use cases.

| Feature | All-Purpose Cluster | Jobs Cluster |

| Purpose | Interactive analysis, data exploration, collaborative development. | Automated, scheduled, production workloads. |

| Creation | Created manually via the UI or API. | Created automatically when a new job run starts. |

| Lifecycle | Persists until manually terminated. Can be shared by multiple users. | Ephemeral. Starts and terminates with the job run. |

| Cost | Higher cost (DBU rate). | Lower cost (DBU rate). |

| Use Case | A data scientist developing a model in a notebook. | A nightly ETL pipeline that runs on a schedule. |

In short, use All-Purpose Clusters for development and exploration, and use Jobs Clusters for production automation to optimize costs.

Cluster Software Versioning: Databricks Runtime

Ensuring Consistency and Performance

The software environment on a Databricks cluster is standardized and versioned using the Databricks Runtime (DBR). The DBR is a pre-packaged collection of components that includes:

- Apache Spark: The core processing engine.

- Optimizations: Databricks-specific performance enhancements like Photon, an vectorized execution engine.

- Common Libraries: Popular Python and Scala libraries for data science and data engineering (e.g., pandas, scikit-learn).

- System Components: Operating system and supporting software.

Each DBR has a version number (e.g., 14.3 LTS). Databricks offers different types of runtimes, such as the standard DBR, the ML DBR (which includes machine learning frameworks like TensorFlow and PyTorch), and the Photon DBR.

By selecting a specific DBR version when creating a cluster, you ensure a consistent, tested, and optimized environment for your code, which eliminates dependency conflicts and makes workloads portable across clusters.

Cluster Management

Filtering and Termination

Filtering Accessible Clusters

In a shared Databricks workspace, the cluster list can become crowded. To view only the clusters you have permission to access or have created, you can use the filter options at the top of the cluster list page. The most common filter is “Accessible by me”, which narrows the list to show:

- Clusters you have created.

- Clusters for which you have been granted explicit permissions (e.g., “Can Attach To”).

Cluster Termination and Its Impact

A cluster can be terminated manually through the UI or API, or automatically based on a configured inactivity timer. Terminating a cluster shuts down and deletes the underlying cloud provider VMs.

The key impacts of termination are:

- State Loss: All ephemeral state stored in the cluster’s memory is lost. This includes cached data and any variables defined in an attached notebook.

- Cost Savings: You stop paying for the compute resources (VMs). This is the primary reason for terminating clusters.

- Detached Notebooks: Any notebooks attached to the cluster are detached. The notebook code itself is preserved in the workspace, but its execution state is gone.

When to Restart a Cluster

Restarting a cluster (which is a terminate followed by a start) is useful in specific scenarios:

- Clearing Cached State: If you suspect that a stale cache is causing unexpected behavior or you want to free up memory without losing your notebook’s variable state.

- Applying Library Changes: If you have installed or updated libraries on a cluster, a restart is often required for the changes to take effect across the entire Spark environment.

- Resolving Driver or Executor Issues: In rare cases, a Spark driver or executor process might enter a hung or unhealthy state. A restart is a direct way to resolve this.

Notebooks Fundamentals

Multi-language, Execution, and Sharing

Using Multiple Languages in a Notebook

Databricks notebooks are polyglot by default. While a notebook has a primary language (e.g., Python), you can execute cells in other languages using magic commands. The supported magic commands are:

%python%sql%scala%r

For example, in a Python notebook, you can run a SQL query directly:

Python

# Main notebook language is Python

df = spark.read.table("my_data")

display(df)SQL

%sql

-- A cell with a SQL magic command

SELECT status, COUNT(*)

FROM my_data

GROUP BY statusRunning a Notebook from Another Notebook

You can execute one notebook from within another using the %run magic command or the dbutils.notebook.run() utility. This is extremely useful for modularizing code.

Using %run: This command runs the specified notebook and makes its defined variables and functions available in the calling notebook’s scope. It’s like a simple include statement.

# In notebook "main_notebook"

%run ./setup_notebook

# Now you can use functions or variables defined in "setup_notebook"

processed_df = process_data(initial_df)Using dbutils.notebook.run(): This method offers more control. It runs the target notebook as a separate job, allows you to pass parameters, and can be run in parallel. It does not share the variable scope.

Sharing Notebooks

Notebooks can be shared with other users or groups within the same Databricks workspace. You can assign different permission levels:

- Can View: The user can see the notebook’s cells and results but cannot edit or run it.

- Can Run: The user can attach the notebook to a cluster and run it.

- Can Edit: The user has full permissions to edit code, run, and manage the notebook.

- Can Manage: The user can change permissions for other users.

Databricks Repos for CI/CD

Git Integration for Production Workflows

Databricks Repos provides Git integration directly within the Databricks workspace. It allows you to connect a workspace folder to a remote Git repository (like GitHub, GitLab, or Azure DevOps). This is the cornerstone of enabling CI/CD (Continuous Integration/Continuous Deployment) workflows for your Databricks projects.

Instead of relying on the limited, linear version history of a standard notebook, Repos enables a professional software development lifecycle:

- Development: Developers clone a remote Git repository into a Databricks Repo, create a new branch, and work on their notebooks and source code.

- Commit & Push: They commit their changes and push the branch to the remote repository.

- Pull Request: They open a pull request (PR) for code review and automated testing.

- CI/CD Automation: A CI/CD pipeline (e.g., GitHub Actions) is triggered. It can run automated tests on the code in the PR.

- Deployment: Once the PR is approved and merged, the pipeline can automatically deploy the updated code to a production environment in Databricks, for example, by updating a Databricks Job to use the new version of the code from the main branch.

Git Operations in Repos

Databricks Repos provides a user-friendly UI for common Git operations:

- Clone: Create a local copy of a remote repository.

- Pull: Fetch and apply changes from the remote repository.

- Commit: Record changes to your local branch.

- Push: Send your committed changes to the remote repository.

- Branch: Create, switch, and manage branches.

- Merge: Merge changes from one branch into another.

Repos vs. Notebook Version History

Standard Databricks notebooks have a built-in version history that automatically saves snapshots as you work. While useful for quick recovery, it has significant limitations compared to Repos.

| Feature | Notebook Version History | Databricks Repos |

| Branching | ❌ | ✅ |

| Code Review | ❌ (Cannot manage pull requests) | ✅ |

| CI/CD Integration | ❌ | ✅ |

| Granularity | Saves the entire notebook state. | Commits specific, meaningful changes. |

| Collaboration | Sequential, difficult for multiple developers. | Parallel development on different branches. |

| Code Management | Limited to notebooks only. | Manages notebooks, Python/Scala files, and any other project assets. |

In summary, notebook version history is a personal safety net, while Databricks Repos is the enterprise-grade solution for team collaboration and production automation.

ELT with Apache Spark

Data Extraction

Reading from Files and Directories

Apache Spark provides a powerful and unified API for reading data from various sources. The primary interface for this is the DataFrameReader (spark.read).

Extracting Data from a Single File

To read a single file, you specify its format and path. For example, to read a CSV file:

# The format method specifies the data source type

df = spark.read.format("csv") \

.option("header", "true") \

.option("inferSchema", "true") \

.load("/path/to/your/single_file.csv")The .format() method is key. Here, "csv" is the prefix that tells Spark which built-in data source connector to use. Other common formats include "json", "parquet", and "delta".

Extracting Data from a Directory of Files

A common scenario in big data is to process a collection of files that share the same schema and format, all located within a single directory. Spark handles this seamlessly. By providing the path to the directory instead of a specific file, Spark will automatically discover and read all the files within it.

# Spark reads all CSV files inside the "events" directory

df = spark.read.format("csv") \

.option("header", "true") \

.load("/path/to/directory/of/events/")This is extremely efficient for processing partitioned data, such as logs generated daily into a new file.

Data Referencing: Views and CTEs

Creating Reusable Logical Pointers

Often, you don’t want to physically write out intermediate data. Instead, you can create a named reference to a transformation or a file path. SQL offers several ways to do this.

Views and Temporary Views

A view is a stored query that can be used like a table. A temporary view is similar but is session-scoped, meaning it is automatically dropped when your Spark session ends. This is the most common type of view used in interactive analysis.

-- Create a temporary view that points to a Parquet file

CREATE OR REPLACE TEMPORARY VIEW sales_view AS

SELECT * FROM parquet.`/path/to/sales/data`;

-- Now you can query the view as if it were a table

SELECT region, SUM(amount)

FROM sales_view

WHERE year = 2024

GROUP BY region;Here, parquet is the data type prefix included after the FROM keyword, telling Spark how to read the underlying data at the given path.

Common Table Expressions (CTEs)

A CTE is a temporary, named result set that exists only for the duration of a single query. It is defined using the WITH clause and helps break down complex queries into logical, readable steps.

-- Use a CTE to filter and then aggregate data

WITH filtered_sales AS (

SELECT * FROM sales_view WHERE region = 'North America'

)

SELECT

product_id,

AVG(amount) as avg_sale

FROM filtered_sales

GROUP BY product_id;Working with External Tables

Tables from Non-Delta Sources

When you create a table in Databricks that points to data stored in a non-Delta format (like CSV, JSON, or Parquet), it is considered an external table. It is crucial to identify that tables from external sources are not Delta Lake tables by default.

This means they lack the key features of Delta Lake:

- No ACID transactions.

- No schema enforcement on writes.

- No time travel capabilities.

Creating an External Table from a CSV File

CREATE TABLE employee_csv (

id INT,

name STRING,

department STRING

)

USING CSV

OPTIONS (

path = "/path/to/employees.csv",

header = "true"

);Creating a Table from a JDBC Connection

You can also create a table that directly connects to an external relational database via JDBC. This allows you to query the external database from within Spark.

CREATE TABLE employees_from_postgres

USING JDBC

OPTIONS (

url = "jdbc:postgresql://dbserver.example.com:5432/employees",

dbtable = "public.employees",

user = "username",

password = "password"

);Queries against this table will be translated and pushed down to the PostgreSQL database.

Data Quality and Cleansing

Counting Nulls and Deduplicating Data

Counting Null Values

Validating data quality often involves checking for missing values.

count_if: This function is a general-purpose way to count rows that satisfy a boolean expression. It is ideal for countingNULLs.SELECT count_if(email IS NULL) AS missing_emails FROM customers;count(column): The standardcount(column_name)aggregate function skipsNULLvalues in the specified column.count(*)orcount(1), on the other hand, counts all rows. You can use this difference to findNULLs.-- If count(*) is greater than count(email), there are NULLsSELECT count(*), count(email) FROM customers;count where x is null: This is a more verbose but equally valid way to express the same logic ascount_if.SELECT count(*) FILTER (WHERE email IS NULL) AS missing_emails FROM customers;

Deduplicating Rows

Duplicate data is a common problem.

Deduplicate an entire row: DISTINCT is the simplest way to remove rows that are complete duplicates.

-- Create a new table without duplicate rows

CREATE OR REPLACE TABLE clean_users AS SELECT DISTINCT * FROM raw_users;Deduplicate based on specific columns: Often, you want to define uniqueness based on a subset of columns (e.g., a primary key). The ROW_NUMBER() window function is a standard pattern for this.

-- Keep only the most recent record for each user_id

CREATE TABLE deduplicated_users AS

SELECT * FROM (

SELECT *, ROW_NUMBER() OVER

(PARTITION BY user_id ORDER BY last_updated_ts DESC) as rn

FROM users_with_duplicates)

WHERE rn = 1;Data Validation Techniques

Enforcing Business Rules

You can use SQL queries to validate business rules and ensure data integrity.

Validate Primary Key Uniqueness: Check if an id column is truly unique. A query that groups by the key and counts should not return any counts greater than 1.

-- Should return an empty result set

SELECT user_id, COUNT(*) FROM users GROUP BY user_id HAVING COUNT(*) > 1;Validate Field Relationships: Check that a field is associated with only one unique value in another field. For example, a zip_code should only belong to one state.

-- This query identifies zip codes mapped to more than one state

SELECT zip_code, count(DISTINCT state) as num_states FROM locations GROUP BY zip_code HAVING num_states > 1;Validate Value Absence: Ensure a specific value is not present in a field. For instance, an order status should never be NULL.

-- This query should return 0

SELECT count(*) FROM orders WHERE status IS NULL;Transforming Data

Casting and String Manipulation

Working with Timestamps

Casting to Timestamp: Raw data often contains dates as strings. You must CAST them to a TIMESTAMP type to perform date/time operations.

SELECT CAST(order_date_string AS TIMESTAMP) AS order_ts FROM sales;Extracting Calendar Data: Once you have a timestamp, you can easily extract parts of it.

SELECT order_ts,

YEAR(order_ts) AS order_year,

MONTH(order_ts) AS order_month,

DAYOFWEEK(order_ts) AS order_day_of_week

FROM orders;Extracting Patterns from Strings

The regexp_extract function allows you to pull substrings that match a regular expression pattern.

-- Extract the domain name from an email address

SELECT

email,

regexp_extract(email, '.*@(.*)', 1) AS domain

FROM users;Handling Complex Data Types

Nested Data, Arrays, and JSON

Extracting Nested Data with Dot Syntax

Modern data sources often use nested structures (structs). Spark allows you to query into these structures using a simple dot syntax.

-- Given a 'user' column with a nested 'address' struct

-- user: { name: "Alice", address: { city: "New York", zip: "10001" } }

SELECT

user.name,

user.address.city

FROM profiles;Parsing JSON Strings

If your data arrives as a JSON string within a table column, you can parse it into a struct using the from_json function. This requires you to provide the schema of the JSON.

SELECT

from_json(json_string_col, 'name STRING, age INT') AS parsed_json

FROM raw_logs;Working with Arrays

Array functions provide powerful capabilities for manipulating lists of items within a row.

explodevs.flatten: These functions are used for nested arrays but serve different purposes.explode: Use this when you want to transform an array into rows. It takes an array and creates a new row for each element in that array. This is a common scenario for un-nesting data.flatten: Use this to collapse an array of arrays into a single array. It does not create new rows.

Reshaping Data

Pivoting from Long to Wide Format

The PIVOT clause is a powerful way to transform data from a long format (where attributes are stored in rows) to a wide format (where attributes are stored in columns). This is common in reporting.

For example, to transform monthly sales data:

-- Long format source table

-- month | category | sales

-- Jan | Books | 1000

-- Jan | Music | 500

-- Feb | Books | 1200

SELECT * FROM (

SELECT month, category, sales

FROM monthly_sales

)

PIVOT (

SUM(sales)

FOR month IN ('Jan', 'Feb', 'Mar')

);

-- Resulting wide format

-- category | Jan | Feb | Mar

-- Books | 1000 | 1200 | ...

-- Music | 500 | ... | ...

Extending SQL with User-Defined Functions (UDFs)

Custom Logic in SQL

A SQL User-Defined Function (UDF) allows you to define a reusable piece of logic in SQL that can be invoked like any built-in function.

Defining a SQL UDF

-- Define a function to convert temperature from Celsius to Fahrenheit

CREATE OR REPLACE FUNCTION an_catalog.a_schema.c_to_f(celsius DOUBLE)

RETURNS DOUBLE

RETURN celsius * 1.8 + 32;

-- Use the function in a query

SELECT reading_date, c_to_f(temp_celsius) AS temp_fahrenheit

FROM weather_readings;

Location and Security of UDFs

- Location: In Unity Catalog, a function is a securable object located within the three-level namespace:

catalog.schema.function_name. - Security Model: You can grant permissions on UDFs to other users or groups. The key permission is

EXECUTE, which allows a user to call the function. This enables you to share business logic securely without exposing the underlying implementation. A user only needsEXECUTEon the function andSELECTon the table to use it.

Conditional Logic with CASE/WHEN

Custom Control Flow in SQL

The CASE/WHEN expression is SQL’s standard way of implementing conditional (if/then/else) logic within a query. It allows you to create custom control flow for transforming data or creating new columns based on specific conditions.

-- Categorize customers based on their number of purchases

SELECT

customer_id,

num_purchases,

CASE

WHEN num_purchases > 50 THEN 'Loyal'

WHEN num_purchases > 10 THEN 'Active'

ELSE 'New'

END AS customer_segment

FROM customer_summary;

This is fundamental for implementing business rules directly within your ELT transformations.

Incremental Data Processing

Delta Lake Fundamentals: ACID Transactions

Bringing Reliability to the Data Lake

Delta Lake is an open-source storage layer that brings reliability to data lakes by providing ACID transactions. It provides these guarantees for all operations, whether it’s a single-row MERGE or a massive batch OVERWRITE.

The benefits of ACID transactions in a big data context are immense:

- Reliability: Failed write jobs do not corrupt your data. The transaction is atomic, so the table is left in its previous, consistent state.

- Data Integrity: Schema enforcement and constraints prevent bad data from being written to your tables.

- Concurrency: Multiple readers and writers can operate on the same table simultaneously without interfering with each other (Isolation). Readers see a consistent snapshot of the data.

An operation is ACID-compliant in Delta Lake if it is managed through the Delta protocol. Any standard DML (INSERT, UPDATE, DELETE, MERGE) or DDL (CREATE TABLE) on a Delta table is ACID-compliant. Directly manipulating Parquet files in a Delta table’s directory using non-Delta tools would not be ACID-compliant and would corrupt the table.

Understanding Delta Lake Tables

Data, Metadata, Managed, and External

A Delta Lake table consists of two main parts:

- Data: The actual data, stored in Parquet files in a directory in cloud storage.

- Metadata: The transaction log, stored in a subdirectory named

_delta_log. This log contains a series of JSON files that are the single source of truth for the table’s state. It tracks every change, enabling ACID transactions and time travel.

H3 Managed vs. External Tables

| Feature | Managed Table | External Table |

| Location | Data and metadata are stored in a location managed by the metastore. | Data is stored in a location you specify; only metadata is managed by the metastore. |

DROP TABLE | Drops both the data and the metadata. The data is deleted. | Drops only the metadata from the metastore. The underlying data remains untouched in your storage. |

| Use Case | Default choice for most tables. Easy to manage. | Use an external table when you need to share data with other systems or want to manage data deletion independently of the table definition. |

Creating a Managed Table

A managed table is created when you do not specify a LOCATION.

CREATE TABLE managed_sales (

id INT,

amount DOUBLE

) USING DELTA;The location of this table’s data and metadata will be in the default database path managed by Databricks.

Time Travel and Auditing

Querying the Past

The Delta Lake transaction log (_delta_log) is an ordered record of every transaction ever committed to the table. This log enables powerful auditing and data versioning features.

Inspecting Table History

Directory Structure: If you inspect the directory of a Delta table, you will see the Parquet data files and the _delta_log folder containing JSON files (00000.json, 00001.json, etc.) for each commit.

Reviewing History: The DESCRIBE HISTORY command lets you review the table’s transaction history, including the version number, timestamp, operation type, and who has written previous versions of the table.

DESCRIBE HISTORY my_delta_table;Rolling Back and Querying Versions

Delta Lake allows you to easily roll back a table to a previous state or query a snapshot of the table as it existed at a specific point in time.

Rollback: You can roll back a table to a previous version. This is a powerful feature for recovering from errant data writes.

-- This creates a new commit that reverts the table's state

RESTORE TABLE my_delta_table TO VERSION AS OF 5;Query a Specific Version: You can query a snapshot of the data without performing a full rollback using the VERSION AS OF syntax. This is invaluable for debugging, auditing, or creating reproducible ML experiments.

SELECT * FROM my_delta_table VERSION AS OF 10;Performance Optimization

Z-Ordering and Compaction

Z-Ordering

Z-Ordering is a technique for co-locating related information in the same set of files. It is a form of multi-dimensional clustering. If you frequently filter a table by certain columns (e.g., WHERE region = 'NA' AND status = 'SHIPPED'), you can Z-Order by those columns.

OPTIMIZE my_delta_table ZORDER BY (region, status);This is beneficial because it allows Spark to read less data. When a query with a filter on a Z-Ordered column is executed, Spark can use the file statistics to skip files that do not contain relevant data, dramatically improving query performance.

OPTIMIZE and VACUUM

OPTIMIZE: Over time, many small transactions can lead to a large number of small files. TheOPTIMIZEcommand compacts these small files into fewer, larger files. This is more efficient for Spark to read.VACUUM: When you delete or update data in Delta Lake, the old data files are not immediately deleted.VACUUMis the command that commits these deletes by removing data files that are no longer referenced by the transaction log and are older than a retention threshold (default 7 days).

Schema Management and Table Operations

Evolving Tables Gracefully

CTAS and Generated Columns

CTAS (Create Table As Select): This is a common and convenient solution for creating and populating a table in a single step.

CREATE TABLE aggregated_sales AS SELECT region, SUM(amount) FROM raw_sales GROUP BY region;Generated Columns: These are special columns whose values are automatically generated based on other columns in the table. This is useful for automatically creating partition columns.

CREATE TABLE events ( event_id LONG, event_ts TIMESTAMP, event_date DATE GENERATED ALWAYS AS (CAST(event_ts AS DATE)) ) PARTITIONED BY (event_date);Adding Comments and Overwriting Data

Table Comments: You can add a comment to a table to describe its purpose.

COMMENT ON TABLE sales IS 'This table contains daily sales transactions.';CREATE OR REPLACE TABLE vs. INSERT OVERWRITE: Both operations replace the data in a table, but they have a key difference.

| Command | Behavior |

CREATE OR REPLACE TABLE | Replaces the entire table, including the schema and properties. If the new query has a different schema, the table schema will be changed. |

INSERT OVERWRITE | Atomically replaces only the data in the table. It requires the new data to be compatible with the existing table’s schema. |

Upserting and Deduplication with MERGE

The Power of MERGE

The MERGE command is one of the most powerful features of Delta Lake. It allows you to perform “upsert” operations—updating existing rows and inserting new rows in a single, atomic transaction.

A scenario in which MERGE should be used is when you need to synchronize a target table with a source of new or updated data, like processing daily change data from a transactional database.

The benefits of MERGE are significant:

- Atomicity: The entire operation is a single transaction.

- Efficiency: It avoids complex multi-step logic (e.g.,

INSERTthenUPDATE). - Deduplication:

MERGEcan be used as a command to deduplicate data upon writing by defining theONcondition based on a primary key. If a row from the source already exists in the target, you can choose toUPDATEit or ignore it.

Efficient Data Ingestion with COPY INTO

Idempotent and Scalable Ingestion

COPY INTO is a SQL command designed for idempotent, incremental data ingestion from file-based sources. A scenario where COPY INTO should be used is when you have files continuously arriving in a cloud storage location and you need to load them into a Delta table efficiently without writing duplicate data.

COPY INTO avoids duplicating data because it keeps track of which files have already been processed. It will not re-ingest a file it has already seen, making the ingestion process idempotent.

COPY INTO my_delta_table

FROM '/path/to/source/files'

FILEFORMAT = CSV

COPY_OPTIONS ('force' = 'false');Delta Live Tables (DLT) Pipelines

Declarative ETL

Delta Live Tables (DLT) is a framework for building reliable, maintainable, and testable data processing pipelines.

To create a new DLT pipeline, the necessary components are:

- A pipeline name.

- A target schema or catalog where the resulting tables will be published.

- Notebook libraries containing the pipeline’s source code (in SQL or Python).

Triggered vs. Continuous Pipelines

| Feature | Triggered Pipeline | Continuous Pipeline |

| Execution | Runs once when triggered, processes all available data, then shuts down the cluster. | Runs continuously, ingesting new data as it arrives with low latency. The cluster remains active. |

| Cost | Lower cost, as compute is only used during the run. | Higher cost, as compute is always on. |

| Latency | Higher latency between runs. | Low latency. |

Auto Loader in DLT

Auto Loader is a feature used within DLT (and outside of it) for scalable, incremental data ingestion from cloud storage. A DLT pipeline is utilizing Auto Loader if its source definition uses cloud_files().

A scenario where Auto Loader is beneficial is when you are ingesting a large number of small files from a source like IoT sensors or application logs. It efficiently discovers new files without having to list the entire directory, a process known as “directory listing,” which can be slow and expensive.

If Auto Loader has inferred all data to be STRING from a JSON source, it is likely because it has not been configured with schema inference enabled or has not been provided with an explicit schema. It defaults to STRING to avoid data loss if it cannot reliably determine the type.

Data Quality with DLT

Enforcing Constraints

DLT allows you to define data quality expectations, or constraints, directly in your pipeline code.

The default behavior of a constraint violation is to record the failing records in the event log and add them to a special quarantine table, but allow the pipeline to continue processing valid data.

You can change this behavior:

ON VIOLATION DROP ROW: Rows that violate the constraint will be silently dropped.ON VIOLATION FAIL UPDATE: The entire pipeline run will fail if any row violates the constraint.

Understanding Change Data Capture (CDC)

Processing Row-level Changes

Change Data Capture (CDC) is the process of tracking and capturing changes made to data in a source system. DLT simplifies CDC pipelines with the APPLY CHANGES INTO statement.

This statement takes a source of change data (with columns indicating the operation type, e.g., INSERT, UPDATE, DELETE) and automatically applies these changes to a target Delta table, handling out-of-order data and simplifying complex CDC logic.

DLT Pipeline Observability and Troubleshooting

The Event Log

The DLT event log is the single source of truth for everything that happens in a pipeline. You can query the event log to:

- Get metrics on data quality and pipeline performance.

- Perform audit logging to see who did what and when.

- Examine data lineage to understand how tables are derived.

Troubleshooting DLT Syntax

- Identifying Errors: The DLT UI and event log will clearly indicate which notebook in a DLT pipeline produced an error.

LIVEKeyword: A common syntax error is forgetting theLIVEkeyword. In DLT, you must refer to other tables within the pipeline asLIVE.table_name. Forgetting this leads to theCREATEstatement failing.STREAMKeyword: Another common error is forgetting to read from a streaming source using theSTREAM()function in theFROMclause (e.g.,FROM STREAM(LIVE.bronze_table)). This is required when performing a streaming read between tables in a DLT pipeline.

Production Pipelines

Orchestrating Workflows with Databricks Jobs

Building Robust, Multi-Task Pipelines

A Databricks Job is the primary tool for orchestrating production workloads. One of the key benefits of using multiple tasks in a single job is the ability to create complex, dependent workflows. This allows you to break down a large process into logical, modular, and reusable units. For example, one task can ingest data, a second can transform it, and a third can run quality checks.

Setting up Predecessor Tasks

You can easily set up a predecessor task in the Jobs UI by dragging a connector from one task to another. This creates a dependency, ensuring that the downstream task will only run if the upstream (predecessor) task completes successfully.

A scenario in which a predecessor task should be set up is a classic ETL workflow. A task that creates a silver table should be a predecessor to the task that aggregates that data into a gold table. This guarantees that the gold table is not built from stale or incomplete data.

Code snippet

+----------------+ +----------------+ +----------------+

| Ingest Task |----->| Transform Task |----->| Analytics Task |

| (Bronze Table) | | (Silver Table) | | (Gold Table) |

+----------------+ +----------------+ +----------------+Scheduling and Monitoring Jobs

Automation and Observability

Reviewing Execution History

The Jobs UI provides a detailed history of every run for a job. For each run, you can review a task’s execution history, which includes:

- Start and end times.

- Duration.

- The cluster used.

- Links to Spark UI logs and driver logs for debugging.

- The exact notebook version that was run.

Scheduling with CRON

You can schedule a job to run automatically using standard CRON syntax. This provides a flexible and powerful way to define schedules, such as “run at 2:15 AM every weekday.” This is a fundamental opportunity for automating production pipelines.

Error Handling and Alerting

Building Resilient Pipelines

Debugging and Retrying

- Debug a failed task: To debug a failed task, you start by examining the error message in the job run’s output. For more detail, you can click through to the Spark driver logs, which will contain the full stack trace and error messages from your code.

- Set up a retry policy: The Jobs UI allows you to set up a retry policy in case of failure. You can configure a task to automatically retry a certain number of times with a specified delay between retries. This is highly effective for handling transient failures, such as temporary network issues.

Alerts

You can create an alert for a job to be notified of important events, most commonly a failed task. This alert can be configured to be sent to one or more destinations. A key capability is that an alert can be sent via email to specified recipients, ensuring that operations teams are immediately notified when a production job fails.

Data Governance

Pillars of Data Governance

A Comprehensive Framework

Data governance is a broad discipline that ensures data is managed as a strategic asset. While it can be broken down in many ways, one common framework identifies four core areas of data governance:

- Data Quality: Ensuring data is accurate, complete, and consistent.

- Data Security & Access Control: Protecting data from unauthorized access and defining who can do what with which data.

- Data Discovery & Lineage: Making data easy to find and understanding its origin and transformations.

- Compliance & Privacy: Managing data according to legal and regulatory requirements (e.g., GDPR, CCPA).

Unity Catalog is Databricks’ solution for data governance, providing features that address all these areas.

Unity Catalog (UC) Architecture

Unifying Governance Across Workspaces

Metastores and Catalogs

- Metastore: The metastore is the top-level container for all metadata in Unity Catalog. It lives at the account level and can manage data assets across multiple workspaces. A key best practice is to have only one metastore per region and to colocate this metastore with the workspaces it will serve to minimize network latency.

- Catalog: A catalog is the first layer of the three-level namespace (

catalog.schema.table) and is used to organize data assets. A common best practice is the segregation of business units across catalogs. For example, you might have separate catalogs forfinance,sales, andhuman_resources.

Unity Catalog Security Model

Securables, Principals, and Cluster Modes

Unity Catalog Securables

A securable in Unity Catalog is any object on which permissions can be granted. This includes:

- Metastore

- Catalog

- Schema

- Table / View

- Function

- External Location

- Storage Credential

Service Principals

A service principal is an identity used for automated tools, jobs, and applications. It is essentially a “robot user.” A key best practice is using service principals for connections from automated systems (like production jobs or external applications) to Databricks. This avoids using personal user credentials, which is more secure and easier to manage.

Cluster Security Modes

To interact with data in Unity Catalog, a cluster must be configured with a compatible security mode. The compatible modes are:

- Shared: Can be used by multiple users. Enforces user-level isolation.

- Single User: Tied to a specific user. Recommended for most individual workflows.

The “No isolation shared” mode is a legacy mode and is not compatible with Unity Catalog.

Setting Up a Unity Catalog Environment

Creating Clusters and Warehouses

Creating a UC-enabled Cluster

To create a UC-enabled all-purpose cluster, you must:

- Select a Databricks Runtime version that supports Unity Catalog.

- Choose either “Single User” or “Shared” from the “Access mode” dropdown.

- Ensure the users who will use the cluster have the

USAGEprivilege on the catalogs they need to access.

Creating a DBSQL Warehouse

A Databricks SQL Warehouse is a compute resource optimized for SQL analytics and BI workloads. To create one, you navigate to the SQL editor, choose “Create SQL Warehouse,” and configure its size (T-shirt sizing) and auto-scaling properties. All SQL Warehouses are Unity Catalog-enabled by default.

Access Control in Unity Catalog

The Three-Layer Namespace

Unity Catalog uses a three-layer namespace to query data: catalog.schema.table. To query a table, a user or principal needs the USAGE privilege on the parent catalog and schema, and the SELECT privilege on the table itself.

-- Querying a table using the full three-level namespace

SELECT * FROM finance_catalog.invoices_schema.transactions_table;To implement data object access control, you use standard SQL GRANT and REVOKE commands.

-- Grant read access on a table to the finance group

GRANT SELECT ON TABLE finance_catalog.invoices_schema.transactions_table TO `finance-group`;

-- Grant the ability to create new tables within a schema

GRANT CREATE TABLE ON SCHEMA finance_catalog.invoices_schema TO `data-engineers`;This provides a familiar, SQL-based model for managing fine-grained permissions on your data assets.