Table of Contents

A Guide to Lakeflow Declarative Pipelines and Materialized Views

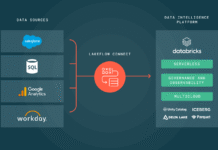

This chapter provides a detailed guide to building modern, reliable data pipelines in Databricks. We will cover the declarative model of Delta Live Tables (DLT) and the on-demand acceleration capabilities of Databricks SQL Materialized Views.

The Shift to Declarative Pipelines

Lakeflow Declarative Pipelines is the current umbrella framework for what the platform has long called Delta Live Tables (DLT). While the official name has evolved, the acronym DLT remains deeply embedded in the UI, API paths, and catalog names, so most engineers still refer to “DLT pipelines.”

Naming Shift at a Glance

| Old Name | New Name | Still Visible in Code/UI |

| Delta Live Tables pipeline | Lakeflow Declarative Pipeline | ✅ |

LIVE virtual schema | Legacy publishing mode | ✅ (deprecated) |

Building Pipelines with Delta Live Tables

DLT is a framework where you define the end state of your data, and the framework manages the orchestration, infrastructure, and updates.

Core DLT Objects

LIVE TABLE: A managed table whose refresh cadence is controlled by the pipeline schedule.CREATE OR REFRESH LIVE TABLEperforms incremental processing when the upstream source supports it (e.g., another DLT table or a source read withcloud_files()). It is not a full rebuild on every run.STREAMING LIVE TABLE: This adds Structured Streaming semantics for near real-time processing. It executes effectively only on Unity Catalog-backed clusters or in Databricks SQL.

Ensuring Data Quality with Constraints

The CONSTRAINT keyword is used to define data quality expectations. The syntax is: CONSTRAINT <name> EXPECT (<boolean-expr>) ON VIOLATION <action>.

FAIL UPDATE: Aborts the batch if any row violates the rule.DROP ROW: Silently discards rows that violate the rule.

Visualizing the DLT Data Flow

This diagram illustrates a typical multi-hop pipeline using DLT objects.

+-------------+ +-------------------+

Raw files -> cloud_files() ─┐ │ STREAMING │

│ │ LIVE TABLE │

▼ ▼ │

+-----------+ │

│ Bronze LT │─────┐ │

+-----------+ │ │

│ │ Incremental joins

▼ ▼

+-----------+ +-----------+

│ Silver LT │ │ MV (in DLT)|

+-----------+ +-----------+

▲

│ SQL dashboard queries

Example DLT Multi-Hop Pipeline

This SQL code defines a two-step DLT pipeline that ingests raw JSON events and cleans them.

-- Bronze Layer: Ingest raw event data incrementally from cloud storage

CREATE STREAMING LIVE TABLE bronze_events (

CONSTRAINT valid_event_id EXPECT (event_id IS NOT NULL) ON VIOLATION DROP ROW

)

COMMENT 'Raw, unprocessed IoT events ingested from cloud storage.'

AS SELECT * FROM STREAM cloud_files(

"/mnt/landing/iot_events/", "json",

map("cloudFiles.schemaLocation", "/path/to/schemas/bronze_events")

);

-- Silver Layer: Clean the raw events, reading from the live bronze table

CREATE STREAMING LIVE TABLE silver_events (

CONSTRAINT valid_timestamp EXPECT (event_timestamp > '2020-01-01') ON VIOLATION FAIL UPDATE

)

COMMENT 'Cleaned and validated IoT events.'

AS SELECT

event_id,

CAST(event_timestamp AS TIMESTAMP) AS event_timestamp,

device_id,

payload

FROM LIVE.bronze_events;

On-Demand Acceleration with Databricks SQL Materialized Views

Definition

A Materialized View (MV) in Databricks SQL is a Unity-Catalog table that stores the pre-computed result of a query. Unlike ordinary views that re-run their query every time they’re read, an MV refreshes only when you ask it to (manually, on a schedule, or during creation). Databricks spins up a short-lived serverless Lakeflow pipeline to do that work and then tears it down, so compute is consumed on demand rather than 24/7.

The Four-Step Lifecycle

Create

A serverless pipeline is silently provisioned to run the first load.

CREATE OR REPLACE MATERIALIZED VIEW sales_by_day

AS

SELECT date, SUM(amount) AS total

FROM delta_catalog.raw_sales

GROUP BY date;Refresh

An MV can be refreshed manually or on a schedule. The refresh is incremental when the sources are Delta tables with Change Data Feed (CDF) enabled.

-- Manual Refresh

REFRESH MATERIALIZED VIEW sales_by_day;

-- Scheduled Refresh on Creation

CREATE OR REPLACE MATERIALIZED VIEW sales_by_day

SCHEDULE CRON '0 0 5 * * ?' -- Every day at 5 AM

AS SELECT date, SUM(amount) AS total FROM delta_catalog.raw_sales GROUP BY date;Query

If the Databricks query optimizer sees that an MV fully satisfies an incoming user query, it automatically rewrites the query plan to read the cached data from the MV instantly.

User SQL ▶︎ Query Optimizer

│ (rewrite if MV matches)

▼

sales_by_day ──▶ resultsDrop

DROP MATERIALIZED VIEW sales_by_day;Why It’s Called “On-Demand Acceleration”

- Compute: Instantiated only for create/refresh. There are no long-running clusters.

- Storage: Stored as Delta files in a managed location.

- Latency: Sub-second for reads since the data is cached.

- Cost: DBUs are charged per refresh minute, with zero cost while idle.

When to Choose a Materialized View

- Dashboard acceleration: For heavy group-bys or joins reused by BI tools.

- Simple periodic ETL: For daily roll-ups without the overhead of a full DLT pipeline.

- Lightweight data masking: Define a view that omits or masks sensitive columns and refresh on demand.

Do not choose an MV for streaming ingest (< 1 min latency) or for complex, multi-step DAGs with data quality rules—use DLT Live Tables for those scenarios.

Comparison of Databricks Relational Objects

| Feature | Live Table (DLT) | Streaming Live Table | Materialized View (SQL) | Standard Delta Table | Temp View |

| Managed by pipeline engine | ✅ | ✅ | ✅ (Serverless) | ❌ | ❌ |

| Physically materialized | ✅ | ✅ | ✅ | ✅ | ❌ |

| Incremental refresh | ✅ | ✅ | ✅ | ❌ | ❌ |

| Supports streaming ingest | ❌ | ✅ | ❌ | ❌ | ❌ |

| CONSTRAINT enforcement | ✅ | ✅ | ❌ | ❌ | ❌ |

| Lifetime | Until dropped | Until dropped | Until dropped | Until dropped | Session |

Comparing Views in Databricks

The main difference between these entities is that a Materialized View physically stores its query results for fast performance, while standard and temporary Views are just saved queries that re-run against the source tables every time they are queried.

This table breaks down the key differences between the three types of views available in Databricks.

| Feature | Standard View | Temporary View | Materialized View (MV) |

| Definition | A saved SQL query stored permanently in the data catalog. | A saved SQL query that exists only for the current session. | A table that stores the pre-computed results of a query. |

| Physically Stores Data? | ❌ No | ❌ No | ✅ Yes |

| Query Performance | Slower (re-runs query) | Slower (re-runs query) | Very Fast (reads cached results) |

| Data Freshness | Always live | Always live | Stale (as fresh as the last REFRESH) |

| Persistence | Permanent (until dropped) | Session-scoped | Permanent (until dropped) |

| Primary Use Case | Simplifying complex queries; providing secure access. | Naming intermediate steps in a single notebook analysis. | Accelerating BI dashboards and frequently used, heavy queries. |

Key Takeaways

- Choose a Standard View when you need a permanent, simplified way to look at live data without storing it twice.

- Choose a Temporary View for private, intermediate steps within a single notebook session that you don’t need to save.

- Choose a Materialized View when query performance is critical, and you are willing to trade live data for speed by refreshing the results on a schedule.

Materialized Views vs. Table Types

The key difference between a Materialized View (MV) and other table types in Databricks lies in its management and refresh lifecycle. A Materialized View is a special type of managed table whose contents are automatically and incrementally refreshed by Databricks based on a schedule, while other tables are either updated manually or managed by a more comprehensive Delta Live Tables (DLT) pipeline.

This table compares a Databricks SQL Materialized View with the table types found in DLT and standard Delta tables.

| Feature | Materialized View (SQL) | Live Table (DLT) | Streaming Live Table (DLT) | Standard Delta Table |

| Management & Updates | Automatically managed and refreshed by Databricks SQL on a schedule. | Managed and refreshed as part of a DLT pipeline run. | Managed and refreshed continuously by a DLT pipeline. | Manually managed via user-run DML (INSERT, MERGE). |

| Update Mechanism | REFRESH or SCHEDULE | DLT Pipeline Trigger (Scheduled or Manual) | Continuous Micro-batches | Ad-hoc or scheduled DML commands |

| Data Freshness | Stale (as fresh as the last scheduled refresh) | Stale (as fresh as the last pipeline run) | Near real-time (seconds to low minutes) | Stale (as fresh as the last manual update) |

| Primary Use Case | Accelerating BI dashboards with simple, scheduled aggregations. | Building incremental batch transformations within a pipeline. | Ingesting and transforming streaming data within a pipeline. | The foundational storage entity in the Lakehouse. |

| Primary Interface | Databricks SQL Editor | DLT Pipeline (Notebook or SQL file) | DLT Pipeline (Notebook or SQL file) | Notebooks, Jobs, SQL Editor |

Key Takeaways

- Choose a Materialized View when you need to accelerate a specific, performance-critical query for BI or reporting and want a simple, schedule-based refresh managed by Databricks SQL without the overhead of a full DLT pipeline.

- Choose a DLT Live Table or Streaming Live Table when you are building a multi-step data pipeline with dependencies between datasets and need the robust orchestration, data quality, and monitoring features provided by the Delta Live Tables framework.

- Choose a Standard Delta Table as your foundational storage. It offers the most flexibility, as it is the target for all other processes and can be managed directly with custom jobs and DML operations.

Materialized View vs. Live Table

The table type closest to a Materialized View (MV) is a Live Table (DLT). Both are materialized, meaning they physically store data, and both are designed to be incrementally refreshed from their source queries.

However, they differ fundamentally in their management, purpose, and execution context.

| Feature | Materialized View (SQL) | Live Table (DLT) |

| Primary Purpose | On-demand query acceleration for BI. | Building blocks for multi-step ETL/ELT pipelines. |

| Management Context | Managed by Databricks SQL via a simple SCHEDULE. | Managed by the Delta Live Tables engine as part of a larger pipeline DAG. |

| Complexity | Designed for single, simple transformations. | Designed for complex, multi-hop dependencies with data quality rules. |

| Compute Model | Refreshes using on-demand, serverless compute. | Refreshes using a DLT-specific cluster configured by the user. |

In short, use a Materialized View for a simple, scheduled refresh of a single query to speed up a dashboard. Use a Live Table when that query is one step in a larger, orchestrated data pipeline with dependencies and data quality checks.

Handling Change Data Capture (CDC) and Data Evolution

A primary challenge in data warehousing and analytics is keeping data synchronized with source systems that are constantly changing. Change Data Capture (CDC) is a design pattern used to track and process these changes—inserts, updates, and deletes—from a source system, typically a transactional database. This chapter explores how Databricks, specifically Delta Live Tables (DLT), provides powerful, declarative tools for handling CDC data and managing data evolution over time.

Implementing CDC with Delta Live Tables

While a traditional MERGE statement can handle CDC in batch jobs, Delta Live Tables offers a specialized, declarative command for streaming CDC data: APPLY CHANGES INTO.

The APPLY CHANGES INTO Command

This command is specific to DLT pipelines and is the standard way to process a stream of change records into a target Delta table. It simplifies the complex logic of identifying inserts, updates, and deletes into a single, easy-to-understand statement.

The source for a STREAMING LIVE TABLE must be append-only. APPLY CHANGES INTO respects this by consuming an append-only stream of change events. The DLT framework then correctly applies these insert, update, and delete events to the target table, which itself is not append-only.

Anatomy of the APPLY CHANGES INTO Command

KEYS: Specifies one or more columns that serve as the primary key to match records between the source stream and the target table.APPLY AS DELETE WHEN: This is an optional clause that defines the condition for deleting a record. You provide a column and value from the source stream that signals a delete operation (e.g.,operation = 'DELETE'). If this clause is omitted, no deletes are processed.SEQUENCE BY: This crucial clause specifies a column used to order the change events, such as a timestamp or a logical sequence number. DLT uses this key to handle out-of-order data correctly, ensuring that a late-arriving, older update does not overwrite a newer version of a record.COLUMNS * EXCEPT (...): This allows you to specify which columns from the source stream should be included in the target table, typically excluding metadata columns like theoperationandsequence_idfields.

SQL Example

This example defines a streaming live table that applies a CDC feed from a bronze table to a silver table.

CREATE STREAMING LIVE TABLE customers_silver

COMMENT 'Cleaned customer data with updates and deletes applied.'

AS APPLY CHANGES INTO LIVE.customers_silver

FROM STREAM(LIVE.customers_bronze)

KEYS (customer_id)

APPLY AS DELETE WHEN operation = 'DELETE'

SEQUENCE BY update_timestamp

COLUMNS * EXCEPT (operation, update_timestamp, _rescued_data);Slowly Changing Dimensions (SCD)

A Slowly Changing Dimension (SCD) is a common data warehousing concept for managing the history of data in dimension tables. DLT’s APPLY CHANGES INTO command has built-in support for the two most common types.

SCD Type 1: Overwrite History

In an SCD Type 1 dimension, new data simply overwrites the old data. No historical record is kept. This is the default behavior of the APPLY CHANGES INTO command shown above. When an update for an existing key arrives, the record is overwritten.

SCD Type 2: Track History

In an SCD Type 2 dimension, changes are tracked by creating new rows for updated records and marking old rows as inactive. This preserves the full history of the dimension. This requires additional columns in the target table, such as is_current (boolean) and date ranges (effective_from, effective_to).

DLT simplifies this process with the TRACK HISTORY ON clause.

SCD Type 2 SQL Example

This example shows how to automatically manage an SCD Type 2 dimension using DLT. DLT will automatically manage the is_current and date range columns.

CREATE STREAMING LIVE TABLE customers_scd2

COMMENT 'Customer dimension with full history preserved (SCD Type 2).'

AS APPLY CHANGES INTO LIVE.customers_scd2

FROM STREAM(LIVE.customers_bronze)

KEYS (customer_id)

SEQUENCE BY update_timestamp

COLUMNS * EXCEPT (operation, update_timestamp)

TRACK HISTORY ON customer_id;STREAMING LIVE TABLE: Standard vs. APPLY CHANGES

The CREATE OR REFRESH STREAMING LIVE TABLE statement can be used in two primary ways, depending on the nature of your source data. The key difference is that the standard form is used for append-only streams, while the AS APPLY CHANGES INTO variant is specifically designed for processing streams that contain updates and deletes, commonly known as a Change Data Capture (CDC) feed.

Standard STREAMING LIVE TABLE

This is the most common form, used to ingest and transform data from an append-only source. Every record from the source stream is treated as a new row to be inserted into the target live table. This pattern is ideal for sources like application logs, IoT sensor data, or any event stream where records represent new, immutable facts.

Syntax:

The defining clause is AS SELECT ... FROM STREAM ....

SQL Example:

This example reads a stream of raw JSON logs from a cloud storage location and creates a streaming live table. Each new log file that appears will be processed as new rows appended to the bronze_logs table.

CREATE STREAMING LIVE TABLE bronze_logs

COMMENT 'Append-only stream of raw application logs.'

AS SELECT

*,

_metadata.file_path AS source_file

FROM STREAM cloud_files('/path/to/application_logs/', 'json');STREAMING LIVE TABLE with APPLY CHANGES INTO

This form is specifically designed to handle a Change Data Capture (CDC) stream. The source stream is expected to contain not just new records, but also records that signify updates to existing rows and deletions. This command declaratively handles the complex logic of applying these inserts, updates, and deletes to a target table to keep it synchronized with a source system.

Syntax:

The defining clause is AS APPLY CHANGES INTO ....

SQL Example:

This example processes a CDC feed from a customers_bronze_cdc table, which contains an operation column (‘INSERT’, ‘UPDATE’, ‘DELETE’) and a customer_id key.

CREATE STREAMING LIVE TABLE customers_silver

COMMENT 'Stateful customer table updated with a CDC feed.'

AS APPLY CHANGES INTO LIVE.customers_silver

FROM STREAM(LIVE.customers_bronze_cdc)

KEYS (customer_id)

APPLY AS DELETE WHEN operation = 'DELETE'

SEQUENCE BY update_timestamp;Key Differences Summarized

| Feature | Standard STREAMING LIVE TABLE | With APPLY CHANGES INTO |

| Input Data Type | Append-only stream of new records. | Stream of change events (inserts, updates, deletes). |

| Output Behavior | Appends new rows to the target table. | Applies inserts, updates, and deletes to the target table. |

| Primary Use Case | Ingesting new events (e.g., logs, IoT data). | Synchronizing a table with a source transactional database. |

| Required Clauses | AS SELECT ... FROM STREAM ... | AS APPLY CHANGES INTO ... KEYS (...) |

Declaring a STREAMING LIVE TABLE without a Query

You can create a streaming live table without an AS clause that defines its source. This method is used to declare an empty streaming table, defining its schema without immediately associating it with a data source. Its purpose is to create a target table that will be populated by one or more separate DLT statements later in the pipeline, typically using INSERT INTO.

The Use Case: Decoupling and Multiple Sources

This pattern is useful for more complex pipelines, especially when you need to:

- Decouple Logic: You can declare all your tables at the top of your pipeline script and define the population logic later, which can improve readability.

- Combine Multiple Sources: This is the primary use case. If you need to populate a single target table from multiple different source streams, you can’t use a single

AS SELECTstatement. Instead, you declare the empty target table once and then use multipleINSERT INTOstatements to feed data into it from your various sources.

Example Workflow

Imagine you have two different sources of events, logins and signups, and you want to combine them into a single all_events table.

Step 1: Declare the Empty Target Table

First, you define the schema for your final, combined table.

CREATE STREAMING LIVE TABLE all_events (

event_timestamp TIMESTAMP,

event_type STRING,

user_id STRING

);Step 2: Define Source 1 and Insert into the Target

Define your first source stream and use INSERT INTO to populate the all_events table.

-- This could be a temporary DLT table just for this logic

CREATE TEMPORARY STREAMING LIVE TABLE raw_logins AS

SELECT * FROM STREAM cloud_files("/path/to/logins", "json");

-- Insert the data into the main table

INSERT INTO LIVE.all_events

SELECT timestamp, "LOGIN" AS event_type, userId FROM STREAM(LIVE.raw_logins);Step 3: Define Source 2 and Insert into the Target

Define your second source stream and use another INSERT INTO statement targeting the same all_events table.

CREATE TEMPORARY STREAMING LIVE TABLE raw_signups AS

SELECT * FROM STREAM cloud_files("/path/to/signups", "json");

INSERT INTO LIVE.all_events

SELECT timestamp, "SIGNUP" AS event_type, userId FROM STREAM(LIVE.raw_signups);In this pipeline, DLT understands that all_events has two upstream dependencies and will process data from both raw_logins and raw_signups into the final table.

Orchestrating Workloads with Databricks Jobs

A Databricks Job is a way to run non-interactive code, such as a notebook or script, on a schedule or on-demand. Jobs are the primary tool for productionalizing data engineering workloads, moving from interactive development to automated, reliable execution. A job consists of one or more tasks and runs on a specified compute cluster.

Building Multi-Task Jobs

While a single-task job is useful, the true power of the orchestrator comes from creating multi-task jobs. You can define dependencies between tasks to build complex workflows that are managed automatically.

Defining Task Dependencies

By defining dependencies, you create a Directed Acyclic Graph (DAG) where a task can be set to run only after its upstream tasks have succeeded. This allows you to build robust, multi-step pipelines directly within the Jobs UI.

A Visual Example: A Multi-Task DAG

This diagram illustrates a common ETL pipeline structured as a multi-task job. The “Process Aggregates” and “Run Data Quality Checks” tasks run in parallel after the “Process Silver” task succeeds.

Code snippet

+-------------------+

| Ingest Raw Data |

| (Bronze Task) |

+---------+---------+

|

| (On Success)

▼

+---------+---------+

| Process Silver |

| (Silver Task) |

+---------+---------+

|

+--------------+---------------+

| (On Success) | (On Success)

▼ ▼

+-----------+---------+ +----------+----------+

| Process Aggregates | | Run Data Quality |

| (Gold Task) | | Checks (QA Task) |

+---------------------+ +---------------------+Task Types

A job task can execute different types of code. Common types for data engineering include:

- Notebook: Runs a Databricks notebook.

- Python Script: Runs a Python (

.py) file. - Delta Live Tables pipeline: Triggers an update of a DLT pipeline.

- SQL: Runs a SQL file or a specific SQL query.

Key Job Configuration Options

Scheduling and Triggers

Jobs can be run manually or triggered automatically. The primary trigger types are:

- Schedule: Runs the job at specified times using CRON syntax.

- File Arrival: Triggers the job when new files arrive in a specified cloud storage location.

- Continuous: Runs the job continuously, immediately starting a new run after the previous one completes.

Compute Configuration

You can configure the compute resources that a job uses.

- Job Cluster: A cluster that is created just for this job run and is terminated immediately after the job completes. This is the most common and cost-effective approach for production jobs as it ensures a clean, isolated environment and you only pay for compute when the job is running.

- All-Purpose Cluster: An existing interactive cluster. Using an all-purpose cluster can reduce job start-up time, but the cluster runs continuously, incurring costs even when no jobs are running.

Job Parameters

You can pass parameters to jobs to make them reusable. For example, a single notebook can be used for a daily ETL job by passing the run date as a parameter, allowing the notebook to process data for that specific day.

Handling Failures and Rerunning Jobs

Robust failure handling is essential for production pipelines.

Failure Handling Configuration

When a task in a multi-task job fails, you can configure the job’s behavior.

- Stop all: This is the default. If one task fails, the entire job run is marked as failed and stopped.

- Retry Policies: You can configure a task to automatically retry a certain number of times if it fails, which can help overcome transient network or service issues.

Repair and Rerun

After a multi-task job fails, you do not have to rerun the entire workflow from the beginning. Databricks offers a Repair and Rerun feature.

When you trigger a repair run, the orchestrator intelligently inspects the previous failed run. It skips all tasks that completed successfully and starts a new run by executing only the failed tasks and all of their downstream dependent tasks. This saves significant time and compute cost, especially in long and complex pipelines.

Databricks SQL and SQL Warehouses

This chapter covers the components of the Databricks platform that are specifically designed to provide a best-in-class experience for SQL analytics and business intelligence (BI), rivaling traditional data warehouses but running directly on the lakehouse.

What is Databricks SQL?

Databricks SQL, often abbreviated as DBSQL, is a dedicated environment within the Databricks platform tailored for SQL-first users, such as data analysts and BI professionals. Its purpose is to provide a high-performance, intuitive SQL analytics experience directly on the data stored in Delta Lake.

The DBSQL environment consists of several key components:

- A SQL-focused UI that includes a powerful SQL editor, query history, results visualization, and dashboarding capabilities.

- Endpoints for connecting external BI tools like Tableau, Power BI, or Looker.

- A specialized compute resource known as a SQL Warehouse.

What are SQL Warehouses?

A SQL Warehouse is the compute resource used to run queries within the Databricks SQL environment. This is distinct from the all-purpose clusters used for data engineering or data science notebooks. SQL Warehouses are specifically optimized for running analytical SQL queries at high concurrency and low latency.

Key Features of SQL Warehouses

- Optimized for SQL: They run an engine that is highly optimized for SQL workloads, including the vectorized Photon engine, which provides significant performance gains over standard Spark.

- Instant and Elastic Compute: SQL Warehouses can start very quickly and can be configured to automatically scale up or down based on the query load. This means more clusters are added to handle high numbers of concurrent users, and they are shut down when demand decreases, balancing performance and cost.

- Multi-Cluster Load Balancing: A single SQL Warehouse endpoint can automatically distribute queries across multiple underlying compute clusters, ensuring high availability and performance for many simultaneous users.

- Serverless Option: Databricks offers a Serverless SQL Warehouse where the compute resources are fully managed by Databricks in its own cloud account. This provides instant startup and removes the need for customers to manage capacity or worry about idle clusters.

Types of SQL Warehouses

There are three main types, offering different levels of performance and management:

- Classic: The original type, running in the customer’s cloud account.

- Pro: Adds more performance features and is the standard for most use cases.

- Serverless: The fully managed, instant-on option.

How It All Fits Together

The typical workflow for a data analyst involves using a client to send a query to a SQL Warehouse, which then executes the query against the data in the lakehouse.

Code snippet

+-------------------+ +-------------------------+ +-------------------+

| BI Tool (Tableau) | | Databricks SQL UI | | User with SQL |

| | | (SQL Editor) | | Client |

+-------------------+ +-------------------------+ +-------------------+

| | |

+--------------------------+--------------------------+

|

▼

+---------------------------+

| SQL Warehouse Endpoint |

| (Optimized Compute) |

+---------------------------+

|

▼

+------------------------------+

| Delta Tables / Unity Catalog |

| (Data in the Lakehouse) |

+------------------------------+

In this model, an analyst writes a query in the DBSQL editor or connects a BI tool. The query is submitted to the SQL Warehouse endpoint. The warehouse then uses its optimized engine to execute the query against the Delta tables defined in Unity Catalog and returns the results to the analyst.

A Comprehensive Guide to Unity Catalog

Unity Catalog is Databricks’ unified and fine-grained governance solution for all data and AI assets on the lakehouse. It provides a centralized place to manage data access, auditing, lineage, and discovery across all of your Databricks workspaces.

The Unity Catalog Governance Model

Before vs. After Unity Catalog (The “Why”)

The introduction of Unity Catalog fundamentally changed how data is governed in Databricks.

Before (Hive Metastore):

Each workspace had its own isolated Hive Metastore. This meant a table defined in Workspace A was not visible in Workspace B, leading to data silos and inconsistent access policies.

+-------------------+ +-------------------+

| Workspace A | | Workspace B |

| +---------------+ | | +---------------+ |

| | HiveMetastore | | | | HiveMetastore | |

| +---------------+ | | +---------------+ |

+-------------------+ +-------------------+After (Unity Catalog):

A single Unity Catalog metastore is created at the account level and shared by all workspaces. This provides one central place to define data and manage permissions.

Code snippet

+-------------------+ +-------------------+

| Workspace A | | Workspace B |

+---------+---------+ +---------+---------+

| |

+-------------+------------+

|

▼

+-----------------------+

| Single Unity Catalog |

| Metastore |

+-----------------------+The 3-Level Namespace and Object Hierarchy

Unity Catalog organizes all data assets into a clear hierarchy. Permissions are granted on specific objects in this hierarchy, and those privileges are inherited by the child objects by default.

Code snippet

+---------------------+

| Metastore (Root) |

+----------+----------+

| (contains)

▼

+----------+----------+

| Catalog |

+----------+----------+

| (contains)

▼

+----------+----------+

| Schema |

| (Database) |

+----------+----------+

| (contains)

▼

+----------------+ +----------------+ +-----------------+

| Table | | View | | Function |

+----------------+ +----------------+ +-----------------+

The data object types are the containers in this hierarchy: the Metastore, Catalog, Schema, and finally the Tables, Views, and Functions.

The Security Model

Unity Catalog’s security model is centralized and based on standard ANSI SQL using GRANT and REVOKE commands. This provides a familiar and powerful way to manage access.

Identities and Roles

Identity Types

Permissions are granted to principals, which can be:

- Users: Individual human users.

- Service Principals: Identities for automated jobs and applications.

- Groups: The recommended way to manage permissions. Groups can contain users, service principals, and even other groups, as nesting is supported.

Identity Federation

Unity Catalog can be federated with your company’s identity provider (like Azure Active Directory or Okta), allowing you to manage users and groups in one central place.

Key Roles

- Metastore Admin: The superuser for the metastore. This role is highly privileged and can create catalogs and manage top-level permissions.

- Object Owners: The creator of an object (like a catalog or schema) becomes its owner and receives extensive privileges on it, including the ability to grant permissions to others.

Managing Privileges

Common Privilege Types

A privilege is a permission to perform a specific action on a data object. The most common are:

SELECT: Allows reading data from a table or view.MODIFY: Allows writing data to a table (INSERT,UPDATE,DELETE,MERGE).USAGE: Required on a parent object to access a child object (e.g., you needUSAGEon a schema to select from a table within it).CREATE TABLE: Allows creating new tables within a schema.EXECUTE: Allows running a user-defined function.

Privileges for File Access

Unity Catalog provides a secure layer to manage access to underlying cloud storage files.

- Storage Credentials and External Locations: A Storage Credential is an object that securely stores a cloud credential (e.g., an IAM Role). An External Location is a pointer that links a specific cloud storage path (like an S3 bucket) with a Storage Credential.

READ FILESandWRITE FILES: These privileges are granted on External Locations. They allow users to bypass table-level governance and interact with files directly, which is necessary for ingestion tools like Auto Loader.- Is

ANY FILEsupported by Unity Catalog?: No. The legacyANY FILEprivilege is not used in Unity Catalog. Its functionality is replaced by the more granularREAD FILESandWRITE FILESprivileges on specific external locations.

Managing Permissions with SQL

Unity Catalog permissions are managed using simple Data Control Language (DCL) commands.

GRANT

The GRANT command gives a privilege on an object to a principal.

-- Grant read access on a table to the 'analysts' group

GRANT SELECT ON TABLE main.reporting.sales_summary TO `analysts`;

-- The group also needs USAGE on the parent objects

GRANT USAGE ON CATALOG main TO `analysts`;

GRANT USAGE ON SCHEMA main.reporting TO `analysts`;SHOW GRANTS

This command shows what privileges have been assigned.

SHOW GRANTS ON TABLE main.reporting.sales_summary;REVOKE

This command takes away a previously granted privilege.

REVOKE SELECT ON TABLE main.reporting.sales_summary FROM `analysts`;DENY

This command explicitly forbids a privilege and always takes precedence over any GRANT.

DENY MODIFY ON TABLE main.gold.customer_pii TO `intern_group`;Advanced Features and Migration

Data Sharing with Delta Sharing

Unity Catalog powers Delta Sharing, an open protocol for sharing data with external organizations. You bundle tables into a Share and grant access to a Recipient.

Built-in Governance and Observability

- Data Search and Discovery: The Databricks UI includes a global search bar to discover all data assets registered in Unity Catalog.

- Automated Lineage: Unity Catalog automatically captures and visualizes data lineage down to the column level for all queries, providing a clear map of how data flows through your lakehouse.

Migration and Coexistence

- Combining Hive Metastore with Unity Catalog: You can still query tables from a workspace’s legacy Hive Metastore by using the special

hive_metastorecatalog (e.g.,SELECT * FROM hive_metastore.default.my_legacy_table;). - Is Migration Required?: While not strictly mandatory to use Databricks, migrating to Unity Catalog is the highly recommended best practice. All new and advanced Databricks data governance and security features are built exclusively for Unity Catalog.