As a Kafka consultant, I’m often asked how we can be 100% sure a complex streaming application works as expected. My answer has increasingly pointed to one platform: Databricks. Testing a distributed system like Kafka is tough. You can’t just rely on unit tests and logs; you need to see the entire data flow from start to finish. Databricks provides the perfect environment for this high-level, data-driven validation.

Here’s why it’s become a cornerstone of my testing toolkit:

- Unified Platform: It seamlessly combines a data lakehouse (for sinking your Kafka topics) with a powerful Spark engine (for analysis). No more duct-taping different systems together.

- Massive Scalability: Need to analyze a full day’s worth of production data? Databricks lets you spin up a massive cluster to query terabytes in minutes and then shut it down, making large-scale testing cost-effective.

- Language Flexibility: Your team can work in SQL, Python, or Scala. This means data analysts can verify business logic with SQL while engineers can write more complex validation scripts in Python, all in the same collaborative notebook.

Our Databricks-Powered Testing Architecture

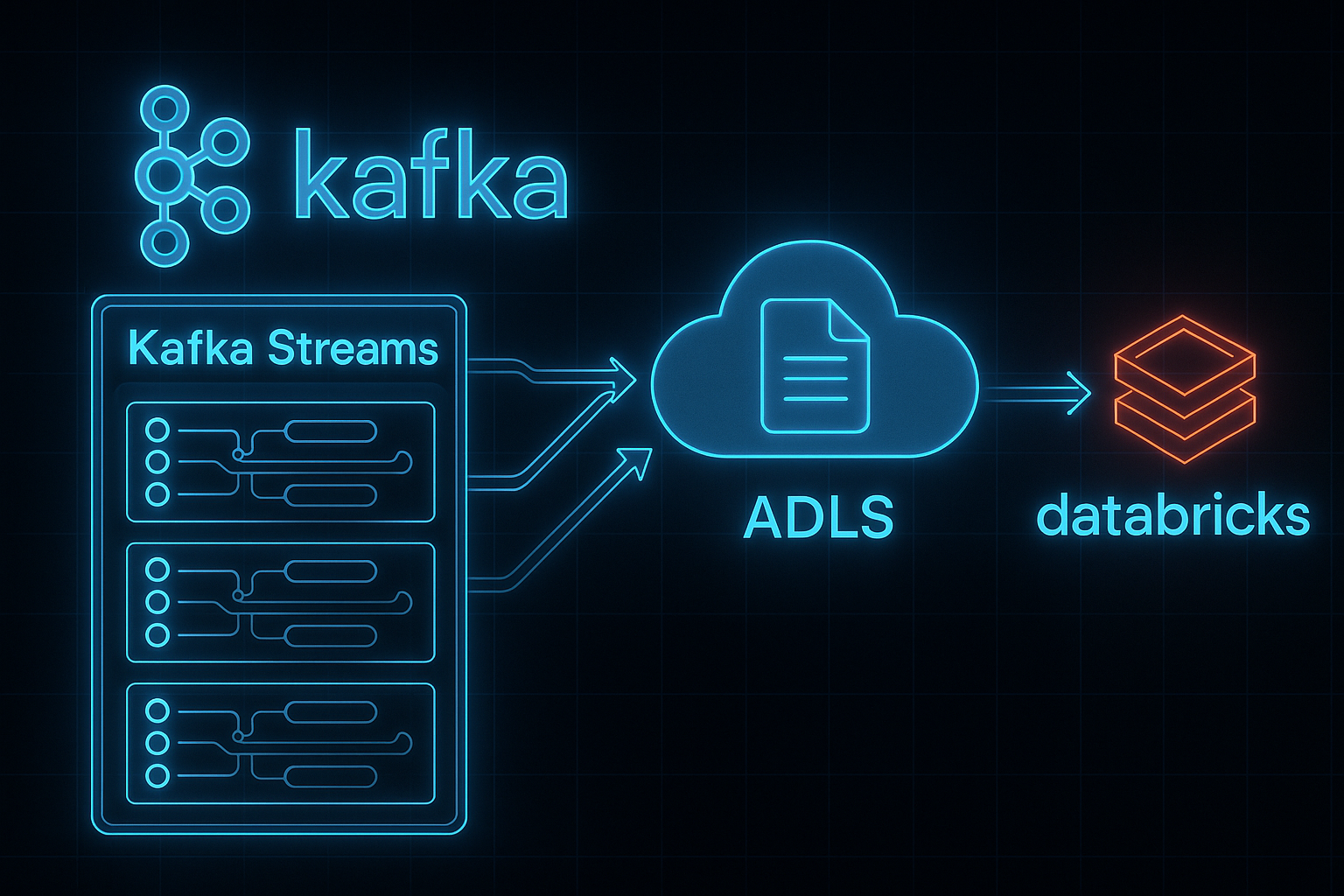

For a recent client with a multi-topology Kafka Streams app, we built a simple but powerful validation architecture with Databricks at its core. The application consumed from multiple Avro topics and produced to others, and we needed evidence from real data that its logic behaved as specified.

We used Kafka Connect to sink all input and output topics, as Avro files, to Azure Data Lake Storage (ADLS). From there, Databricks took over as the central analysis hub.

    Input Topic (Avro) ---> [Dedupe App] ---> Output Topic (Avro)
            |                                          |
            v                                          v
    [Kafka Connector]                          [Kafka Connector]
            |                                          |
            v                                          v
     ADLS (as Avro)                             ADLS (as Avro)
            |                                          |
            +---------------------+--------------------+
                                  |
                                  v
                            [Databricks]
                                  |
                                  v
                        [Spark SQL Analysis]
                                  |
                                  v
                             Validation

The Playbook: From Raw Avro to Insight with Databricks SQL

Here’s how we used Databricks to validate a critical requirement: ensuring an output topic was perfectly free of duplicates.

Step 1: Create Views Directly on Raw Data

This is where Databricks shines. We didn’t need a complex ETL process to analyze our Kafka data. We used a single Spark SQL command to create a queryable view directly on top of the raw Avro files sitting in our data lake.

    -- Databricks understands how to read the Avro format right out of the box!
    CREATE OR REPLACE TEMP VIEW output_view_X AS
    SELECT
      *
    FROM
      avro.`abfss://[email protected]/path/to/output_topic_X/date=2025-07-11`

Step 2: Run a Simple, Powerful Query

With our view created, verifying uniqueness becomes a trivial, readable SQL query. The power of the Spark engine underneath handles the heavy lifting of scanning all the data.

    -- Find any duplicates that might have slipped through
    SELECT
      eventId,
      COUNT(*) AS record_count
    FROM
      output_view_X
    GROUP BY
      eventId
    HAVING
      record_count > 1

An empty result from this query gives us immediate, data-driven evidence that no eventId appears more than once in that day's output, which is exactly what the deduplication logic for that topology is supposed to guarantee.
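An empty result only means something if the query would actually catch a duplicate, so it is worth running it once against data that is known to be dirty. Here's a minimal local sketch of that sanity check. It uses Python's built-in sqlite3 as a stand-in for Spark, and the rows are invented for illustration; only the view name and the eventId column come from the query above.

```python
import sqlite3

# Local stand-in for the Databricks view: a tiny in-memory table.
# The rows are made up; 'e2' is deliberately duplicated.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE output_view_X (eventId TEXT);
    INSERT INTO output_view_X VALUES ('e1'), ('e2'), ('e2'), ('e3');
""")

# Same shape as the duplicate check: group by key, keep groups larger than 1.
DUPLICATES_SQL = """
    SELECT eventId, COUNT(*) AS record_count
    FROM output_view_X
    GROUP BY eventId
    HAVING COUNT(*) > 1
"""

duplicates = conn.execute(DUPLICATES_SQL).fetchall()
print(duplicates)  # [('e2', 2)]
```

If the query flags the planted duplicate here, an empty result on the real view is much easier to trust.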

Going Deeper: Advanced Validation with Databricks

Because Databricks gives us easy access to both the input and output topic data, we can perform much more sophisticated validations than just checking for duplicates.

Data Loss Verification

A LEFT ANTI JOIN between an input view and an output view returns every input record with no match in the output, that is, anything that was consumed but never produced. Because Spark distributes the join across the cluster, the check stays feasible even on a full day of data.
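To make the anti-join idea concrete, here's a small sketch that can be run locally on hand-written rows. It assumes both views share an eventId key; the table names input_view and output_view and the sample rows are illustrative, not taken from the client project. SQLite has no LEFT ANTI JOIN, so the query uses the equivalent NOT EXISTS form, with the Spark SQL spelling shown in a comment.

```python
import sqlite3

# Toy stand-ins for the input and output views (names and rows are assumptions).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE input_view  (eventId TEXT, payload TEXT);
    CREATE TABLE output_view (eventId TEXT, payload TEXT);
    -- 'e1' arrives twice on the input (a duplicate); 'e3' never reaches the output.
    INSERT INTO input_view  VALUES ('e1', 'a'), ('e1', 'a'), ('e2', 'b'), ('e3', 'c');
    INSERT INTO output_view VALUES ('e1', 'a'), ('e2', 'b');
""")

# Anti-join: input keys with no matching output row.
# In Spark SQL the same check could be written as:
#   SELECT DISTINCT i.eventId
#   FROM input_view i LEFT ANTI JOIN output_view o ON i.eventId = o.eventId
MISSING_SQL = """
    SELECT DISTINCT i.eventId
    FROM input_view AS i
    WHERE NOT EXISTS (
        SELECT 1 FROM output_view AS o WHERE o.eventId = i.eventId
    )
    ORDER BY i.eventId
"""

missing = [row[0] for row in conn.execute(MISSING_SQL)]
print(missing)  # ['e3']
```

Note that the duplicated 'e1' is not reported as lost: for a deduplicating app, a key counts as delivered as long as it shows up in the output at least once.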

End-to-End Latency

How long does a message really take to get through your system? By joining the input and output views on a message key, we can calculate the timestamp difference for every single event and get a measured latency distribution rather than an estimate. This assumes the sink preserves a comparable timestamp on both sides, such as the Kafka record timestamp.
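The latency join can be sketched the same way on toy data. This is only an illustration under stated assumptions: the key is eventId, and each sunk record carries a millisecond timestamp in a hypothetical ts_ms column. Because the input may contain duplicates, the sketch measures from the first occurrence of each key.

```python
import sqlite3
import statistics

# Toy stand-ins for the two views; ts_ms is a hypothetical timestamp column.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE input_view  (eventId TEXT, ts_ms INTEGER);
    CREATE TABLE output_view (eventId TEXT, ts_ms INTEGER);
    INSERT INTO input_view  VALUES ('e1', 1000), ('e1', 1500), ('e2', 2000), ('e3', 3000);
    INSERT INTO output_view VALUES ('e1', 1040), ('e2', 2025), ('e3', 3110);
""")

# Per-event latency: output timestamp minus the earliest input timestamp for that key.
LATENCY_SQL = """
    SELECT i.eventId, o.ts_ms - i.first_ts_ms AS latency_ms
    FROM (
        SELECT eventId, MIN(ts_ms) AS first_ts_ms
        FROM input_view
        GROUP BY eventId
    ) AS i
    JOIN output_view AS o ON o.eventId = i.eventId
    ORDER BY i.eventId
"""

latencies = [row[1] for row in conn.execute(LATENCY_SQL)]
print(latencies)                     # [40, 25, 110]
print(statistics.median(latencies))  # 40
print(max(latencies))                # 110
```

On Databricks, the summary step would more likely stay in SQL, for example with an aggregate such as percentile_approx over the latency column, rather than pulling rows back into Python.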

Complex Transformation Logic

If your app does more than just filter—say, it enriches events with new data—you can validate that logic at scale. A simple JOIN can compare the “before” and “after” versions of each event to ensure the transformation was applied correctly every time.
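As a rough sketch of that before-and-after comparison, suppose the app is expected to upper-case a currency field and add an is_large flag for amounts of at least 100000 cents. Both rules, the column names, and the rows are hypothetical and exist only to show the pattern: recompute the expected output from the input inside the query, then list every event where the actual output disagrees.

```python
import sqlite3

# Toy stand-ins for the views; the schema and the two transformation rules are invented.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE input_view  (eventId TEXT, amount_cents INTEGER, currency TEXT);
    CREATE TABLE output_view (eventId TEXT, amount_cents INTEGER, currency TEXT, is_large INTEGER);
    INSERT INTO input_view  VALUES ('e1', 500, 'eur'), ('e2', 250000, 'usd'), ('e3', 100, 'gbp');
    -- 'e3' was not upper-cased, so it should be reported as a mismatch.
    INSERT INTO output_view VALUES ('e1', 500, 'EUR', 0), ('e2', 250000, 'USD', 1), ('e3', 100, 'gbp', 0);
""")

# Join "before" and "after" on the key and keep rows where the output
# differs from what the rules say it should be.
MISMATCH_SQL = """
    SELECT i.eventId
    FROM input_view AS i
    JOIN output_view AS o ON o.eventId = i.eventId
    WHERE o.currency <> UPPER(i.currency)
       OR o.is_large <> (CASE WHEN i.amount_cents >= 100000 THEN 1 ELSE 0 END)
    ORDER BY i.eventId
"""

mismatches = [row[0] for row in conn.execute(MISMATCH_SQL)]
print(mismatches)  # ['e3']
```

One caveat with this pattern: a plain `<>` comparison silently skips rows where either side is NULL, so nullable fields need an explicit null check or a null-safe comparison.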

In short, Databricks allows you to treat your entire stream processing logic as a function that can be tested against a complete set of inputs and outputs, providing a level of confidence that’s otherwise hard to achieve.